In this post, I describe a prototype of an Intelligent CAD system based on AI, which I developed for the Future Prototyping Exhibition (Melbourne School of Design, March 2020). The prototype allows the designer to interact with AI through sketches and to get feedback in the form of photorealistic images. Despite the toy-like features of the system, its development made it possible to investigate the potential of AI in design and to set key objectives for further research in this field.

Introduction

What I draw is different from what I picture in my mind, just as what I say is different from what I think. Any translation process alters the nature of the information.

If the objective is to preserve the information, this can clearly be considered a drawback. However, in design, as in any other creative activity, transformations of information are not only harmless but also beneficial: they allow the initial information to be reinterpreted in new ways. For example, by sketching a design idea on paper, the designer translates information that exists only in his mind into a graphical form. Then, by observing the sketch, he may discover new properties emerging from the translation process. In other words, as observed by Goel [1], sketching allows the designer to have a conversation with himself.

The reason why sketching is so powerful in the development of design ideas is that it activates multiple processes of translation (from mind to paper, and vice versa), which continuously expand and redefine the meanings of an initial design idea.

Current computational design tools do not support this process. Rather, they have introduced a different form of design exploration which is highly influenced by the way the designer commands the machine. Software interfaces have dramatically changed the way we think while designing. For instance, the commands of 3D modelling software, as well as the available geometric entities, have become the grammar for the generation and transformation of architectural forms. This holds true not only within the context of the software but also when the designer turns off the computer and goes back to paper. In a way, computational tools have transformed the language of design, thus changing our mental processes, rather than augmenting them.

This has had several implications in architecture, the most important of which is the reduction of design considerations to form. This is caused by the impossibility of controlling, through software, the multiple dimensions of a design problem; since we think through software, we are also inclined to ignore them. The same applies to structural design, where computational tools (such as optimisation algorithms) often reduce the complexity of the design problem to considerations about structural performance.

In summary, although technology has opened a new space of design possibilities, it has made designers overly dependent on computers and less prone to engage in the creative exploration of design ideas. In particular, the use of computers instead of paper as a means for design exploration enhances control over some design aspects but also diminishes the designer's creative potential.

There is a need for new computational design tools that assist designers in the most crucial stage of design development: idea generation. These tools must adapt to the designer's natural way of exploring and expanding ideas, rather than impose thinking patterns upon them. To do so, they should act as design partners, and thus be endowed with a form of intelligence.

An AI-based system for design exploration

‘Sketches Of Thought’ is a prototype of an Intelligent CAD system (Duffy, Persidis [2]) which allows designers to explore multiple instantiations of a design idea by sketching. At the core of the system is an AI model which translates hand-drawn sketches into photorealistic architectural/structural images. The role of the AI is to augment the natural process of translation and reinterpretation that occurs when design ideas are developed through sketches.

The dialogue with the system happens through a visual interface: the designer communicates by sketching directly on a touchscreen, while the system responds by showing the results of the image translation process. Interaction with the system does not end after a first iteration. Instead, the designer is encouraged to adjust the initial sketch, or even make new sketches, several times to explore, with the aid of the machine’s feedback, different elaborations of an idea.

Before delving into the details of the system, it is necessary to outline the scope and the hypothesis underlying this experiment.

The aim is to test the capability of AI to deal with real-world information about architectural/structural designs, which is scarce and highly unstructured. In this experiment, the information is limited to a dataset of pictures of architectural/structural designs, which comprises little more than a thousand samples and is extremely varied: it includes pictures of buildings of different sizes and styles, taken from multiple viewpoints.

The experiment assumes that design thinking can be augmented by exchanging graphical information with an AI model at two different levels of abstraction: sketches and photorealistic images. This is a simplification, since sketches alone encompass many levels of abstraction (Lawson [3]). Design thinking also exploits other forms of symbolic representation, such as textual or conceptual ones, and relies on information from different knowledge domains not necessarily linked to design.

Having said that, let’s jump into the development of the system. The next section briefly describes how the chosen AI model operates, and the stages followed for the construction of the dataset. Later, I introduce the interface developed to communicate with the AI model and the installation of the system at an exhibition.

AI model training

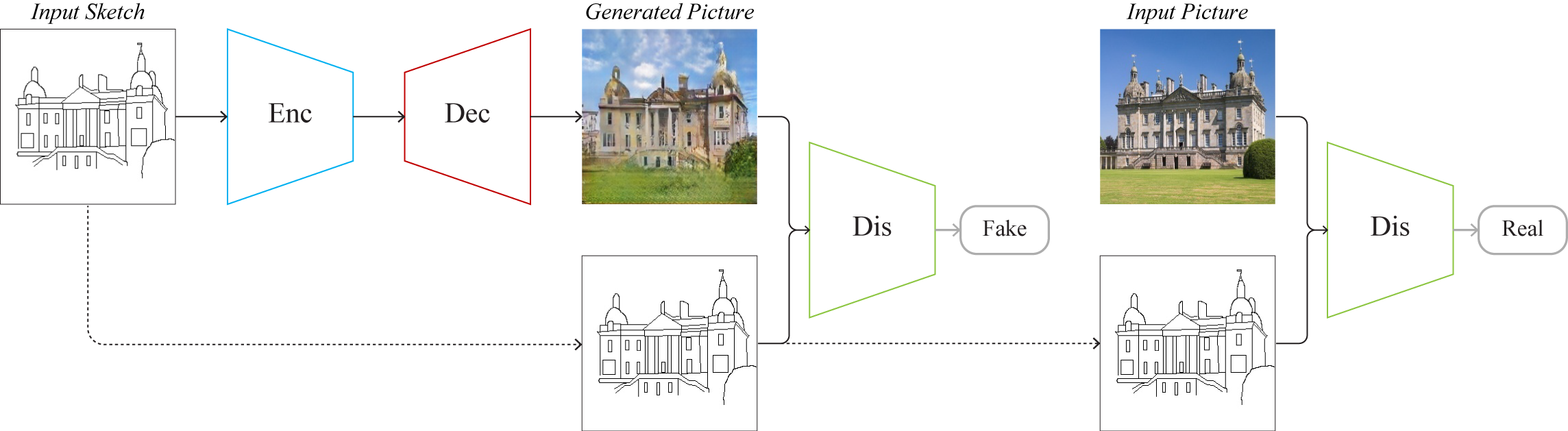

The AI model used in this system is a Generative Adversarial Network (GAN) named pix2pix (Isola, Zhu [4]). This model, as suggested by the name, performs image-to-image translation by mapping pixels of an input image (A) into pixels of an output image (B). The translation process is delegated to a combination of two different Artificial Neural Networks named Encoder and Decoder, which work in sequence to perform two tasks: (1) extract the main visual pattern from image A through the progressive encoding of its information, and (2) expand this visual pattern to generate new ones, which are finally combined to produce image B. This process does not completely alter the information of image A, but preserves some underlying structures and builds additional information upon them.
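To make the encode-then-expand sequence more concrete, here is a minimal sketch that only tracks how the image resolution changes through the two networks. The 256-pixel input and the eight stride-2 stages are assumptions taken from the defaults of the published pix2pix generator, not settings reported in this post, and the function name is hypothetical.

```python
def encoder_decoder_sizes(input_size=256, stride=2, depth=8):
    """Return the feature-map side length after each encoder and decoder stage.

    input_size, stride and depth are assumed values (published pix2pix
    defaults), not the configuration used for Sketches Of Thought.
    """
    encoder = []
    size = input_size
    for _ in range(depth):
        size //= stride      # each encoder stage halves width and height
        encoder.append(size)

    decoder = []
    for _ in range(depth):
        size *= stride       # each decoder stage doubles them again
        decoder.append(size)
    return encoder, decoder


encoder, decoder = encoder_decoder_sizes()
print("encoder:", encoder)
print("decoder:", decoder)
```

Under these assumptions the sketch is squeezed down to a single spatial position before being expanded back to full resolution. In the published model, skip connections link mirrored encoder and decoder stages, which is one way the underlying structures of image A can survive the bottleneck.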

Pix2pix was trained using a purpose-built dataset of pictures of architectural/structural designs. For each picture, a sketch was hand-drawn to create a second dataset (details on the construction of the two datasets are provided in the Dataset section). The datasets of pictures and sketches were then combined to form a single dataset consisting of pairs of data samples. Given a pair of images, the AI model was trained to translate the sketch into its corresponding picture. This means that the model had to reconstruct image B as closely as possible starting from image A. To do so, pix2pix implements a third neural network, named Discriminator, whose role is to judge how closely the output of the reconstruction process resembles the target picture.
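The interplay between reconstruction and Discriminator can be sketched with toy numbers. The snippet below follows the objective described in the pix2pix paper [4]: an adversarial term plus a weighted L1 distance between the generated and target pictures. The weight of 100 is the paper's default and is an assumption here, since this post does not report training settings. Images are represented as flat lists of values in 0..1, and all function names are hypothetical.

```python
import math

EPS = 1e-12  # avoids log(0) in the toy example


def l1_distance(generated, target):
    """Mean absolute difference between two equally sized lists of pixel values."""
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(target)


def generator_loss(score_on_fake, generated, target, l1_weight=100.0):
    """Adversarial term plus weighted L1 reconstruction term.

    score_on_fake is the Discriminator's estimated probability (0..1) that
    the generated picture is real. l1_weight=100 is an assumed default.
    """
    adversarial = -math.log(score_on_fake + EPS)  # small when the Discriminator is fooled
    return adversarial + l1_weight * l1_distance(generated, target)


def discriminator_loss(score_on_real, score_on_fake):
    """Low when real pairs score near 1 and generated pairs score near 0."""
    return -(math.log(score_on_real + EPS) + math.log(1.0 - score_on_fake + EPS))


target = [0.0, 0.5, 1.0]
close = [0.1, 0.5, 0.9]   # a reconstruction near the target picture
far = [1.0, 0.0, 0.0]     # a reconstruction far from it

print(generator_loss(0.5, close, target))
print(generator_loss(0.5, far, target))
```

With the same Discriminator score, the reconstruction that is closer to the target picture receives the lower loss, which is what pushes the model from coarse structures towards detailed ones during training.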

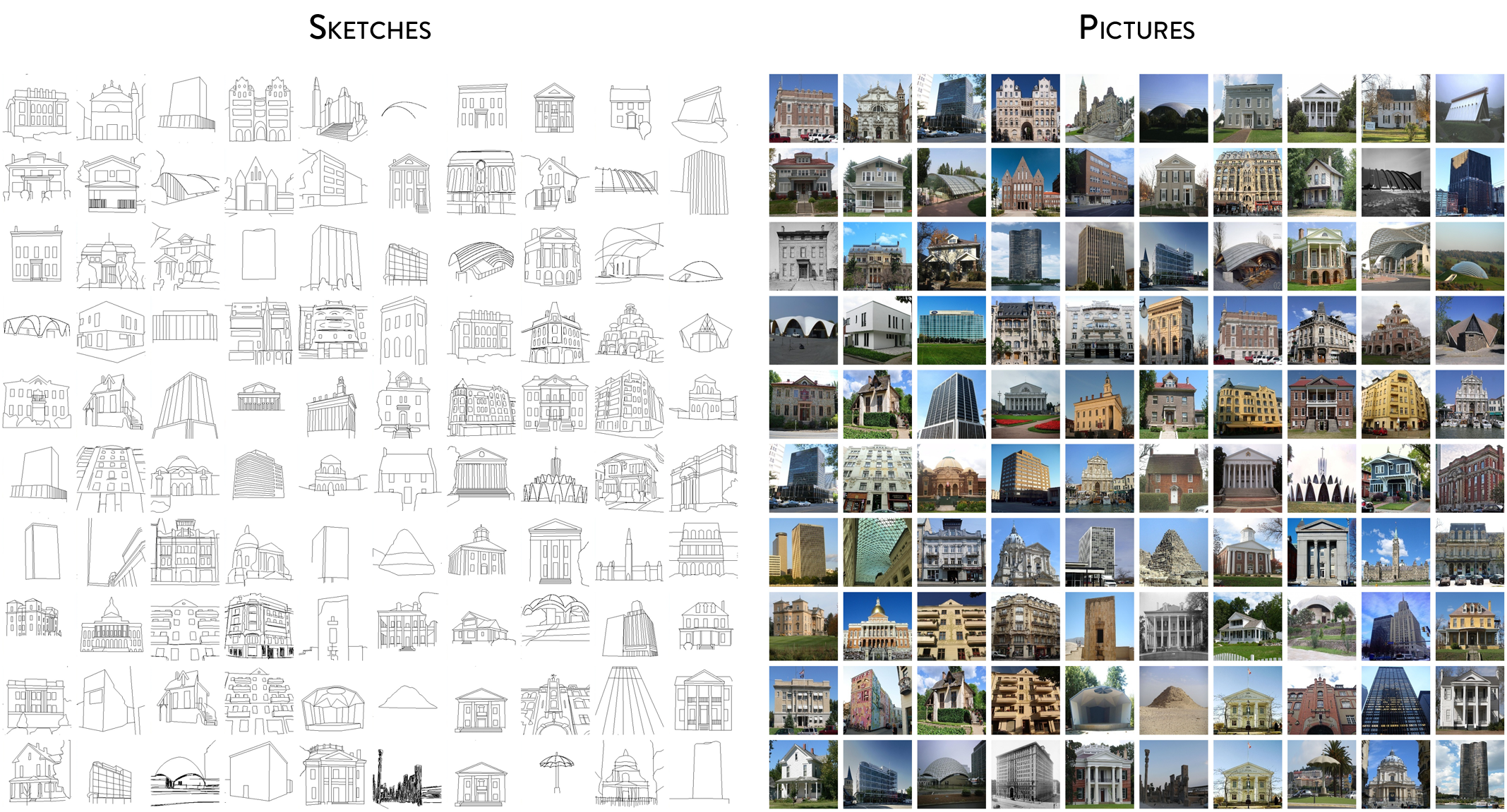

Figure 1. Some of the 1250 pictures populating the dataset with their corresponding hand-drawn sketches.

Figure 2. Diagram of the pix2pix architecture showing the three main components of the model.

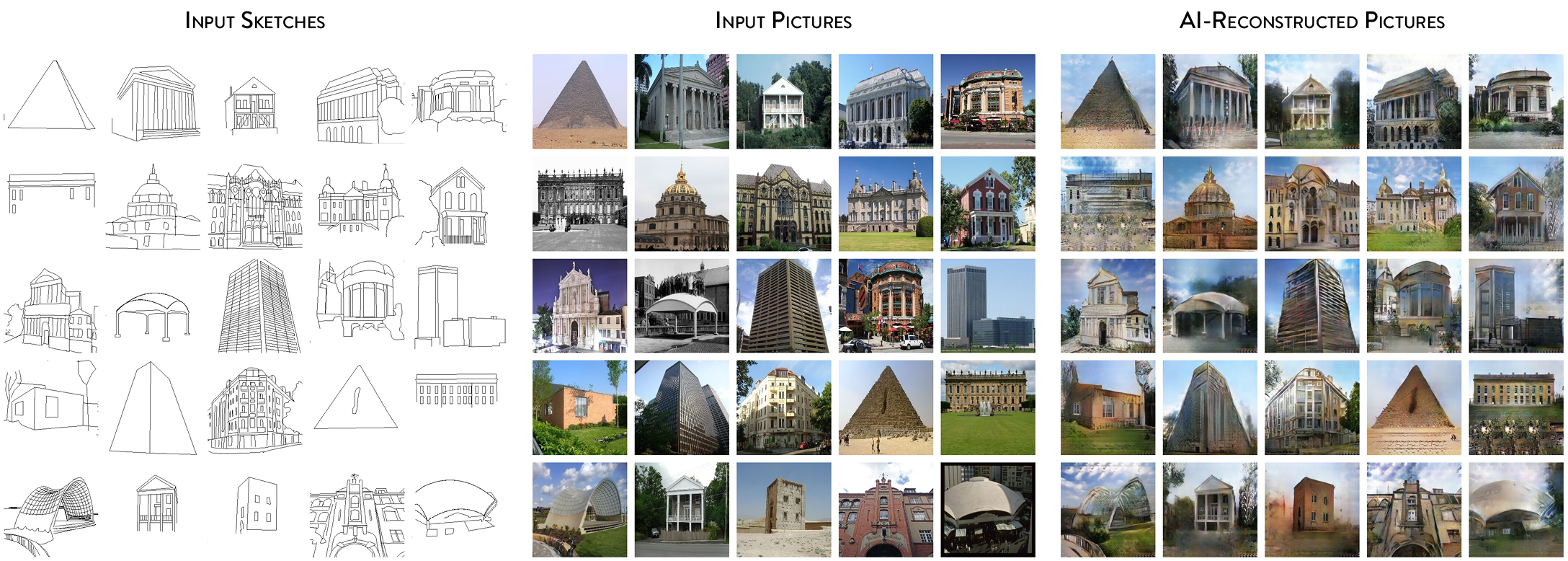

In the early training iterations, the model could reconstruct only coarse structures of the target picture. After a while, however, the reconstruction quality increased, and many photorealistic features emerged. The trained model was then presented with a previously unseen sketch and was asked to perform the translation task based on the experience acquired during training.

Figure 3. Reconstruction quality achieved by the model over consecutive training iterations.

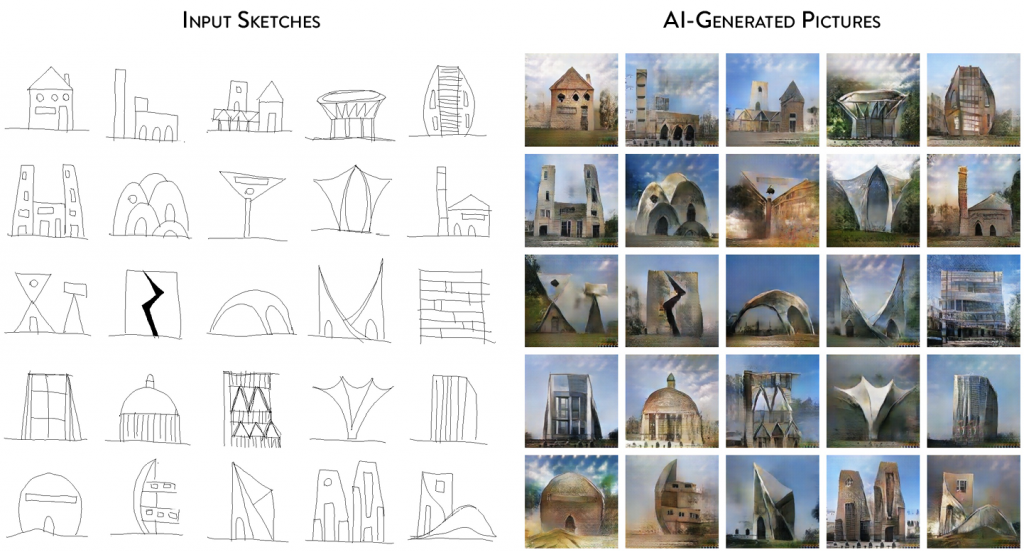

Figure 4. Multiple dataset samples reconstructed by the model after the training process.

Figure 5. Pictures generated by the model starting from new sketches (not included in the dataset).

Dataset

The construction of the dataset followed two sequential stages: (1) collection of pictures of architectural/structural designs, and (2) conversion of such pictures into sketches.

Pictures of architectural designs were retrieved from an existing dataset developed for image classification [5], in which pictures are categorised by architectural style, whereas pictures of structural designs, comprising different structural typologies, were collected from the online catalogue of structurae.net. Data pruning was performed to guarantee a minimum degree of consistency in the dataset. This process consisted of discarding overly distorted pictures and all those showing aerial views or details. After pruning, the total number of data samples populating the dataset was approximately 1250.

Although many strategies exist for the conversion of pictures into sketch-like images (such as the edge-extraction algorithms implemented in computer vision libraries like OpenCV, or the more advanced Holistically-Nested Edge Detection, which is itself based on AI), none of these returned results comparable with hand-drawn sketches. Therefore, sketches were drawn manually for every picture in the dataset. Four people contributed to the production of sketches using different styles of representation, including ‘free-hand’ and ‘geometric’. The reproduction accuracy also varied from very detailed to extremely simplified. All of this dramatically increased the variance of the dataset, but it also allowed the AI model to deal with different inputs, and thus to adapt to the style of representation chosen by the designer.
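For readers unfamiliar with automatic edge extraction, the following is a minimal pure-Python Sobel filter on a tiny grey-scale grid. It is a stand-in for illustration only: it is not the OpenCV routine or the Holistically-Nested Edge Detection model mentioned above, and the function name and threshold are hypothetical.

```python
def sobel_edges(image, threshold=1.0):
    """Return a binary edge map (1 = edge) for a 2D list of grey values.

    Border pixels are left at 0 because the 3x3 kernels do not fit there.
    """
    height, width = len(image), len(image[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * pixel
                    gy += ky[dy][dx] * pixel
            # Mark the pixel when the gradient magnitude is large enough
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


# A 6x6 picture: dark left half, bright right half
picture = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
for row in sobel_edges(picture):
    print(row)
```

A filter of this kind marks every strong change in brightness, with no notion of which lines matter to a designer. This may be one reason why automatic conversions differ so much from hand-drawn sketches, which select and abstract.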

Interface

The model described above was given a GUI to allow the exchange of graphical information between humans and AI. The interface consists of two separate screens.

The first one shows two canvases, one for sketching and another for the visualisation of the AI-generated pictures. A set of buttons is also included to access the drawing functionalities and pre-load some of the sketches used for training.

The second screen is used to visualise the internal layers of the model. The purpose of this visualisation is to help the designer become familiar with AI technology by unveiling the black box of its internal processes. Moreover, as the AI model simulates some aspects of human cognition, a look inside the black box of AI also means visualising a simplified version of human mental processes. Therefore, learning about AI is an opportunity for humans to learn more about themselves.

Figure 6. The first screen of the interface. It allows the user to draw on a canvas and interact with the AI model in real time.
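How the second screen renders the internal layers is not detailed here. One common approach, shown below purely as an assumption, is to rescale the activations of a layer linearly to grey levels so that they can be drawn as an image. The function name is hypothetical.

```python
def to_greyscale(feature_map):
    """Rescale a 2D list of activations linearly to integers in 0..255.

    This is an assumed display step, not the method used by the interface.
    """
    values = [v for row in feature_map for v in row]
    low, high = min(values), max(values)
    span = high - low
    if span == 0:
        # A constant map carries no contrast; show it as black
        return [[0 for _ in row] for row in feature_map]
    return [[round(255 * (v - low) / span) for v in row] for row in feature_map]


activations = [[0.0, 1.0], [2.0, 5.0]]
print(to_greyscale(activations))
```

The weakest activation is drawn black and the strongest white, so the patterns a layer responds to become visible regardless of the raw range of its values.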

Figure 7. The second screen of the interface. It allows visualising the internal layers of the AI model.

The system was presented at the Future Prototyping Exhibition, which was held at the Melbourne School of Design in March 2020. The software ran on a desktop computer, while the interface was used on a touch screen. Despite the overall interest in the system, especially owing to its interactive nature, users struggled to grasp the intentions behind its development. This suggests that the format of the presentation was unsuccessful. A possible explanation is that the activity of sketching is always triggered by a design intention. Such an intention does not come from nowhere: it is the result of a design brief. Lacking a brief, most users of Sketches of Thought approached the system by producing sketches of random forms, which could never be interpreted meaningfully by an AI model trained exclusively on pictures of architectural/structural designs.

Figure 8. Overview of the Future Prototyping Exhibition at the Melbourne School of Design (March 2020).

Conclusions

At its current stage, Sketches Of Thought is far from being a true Intelligent CAD System. The limitations of the scope of this prototype led to a very primitive form of Artificial Design Intelligence, which was capable of dealing with only graphical information (in the form of sketches and pictures) related to a very narrow knowledge domain. For this reason, the actual possibility of using this system to enhance the designer’s creativity is highly speculative.

Despite these limitations, Sketches Of Thought demonstrated some interesting features, which suggest directions for research on AI in design. First, the system is based on a form of human-machine interaction that promotes exploration rather than fixation of design ideas. On the one hand, the designer does not need to explicitly state the design problem (as required by current computational design approaches) but is encouraged to explore his idea through sketches from the very first interaction with the system. On the other hand, the machine does not need instructions to provide feedback but can rely on its own experience.

Second, the interactive nature of the system encourages playfulness, which is deemed to be a key feature of creative exploration. Users of the system are often curious about how an abstract sketch would be transformed by the AI. Although this process may seem pointless, I strongly believe that, when a design intention exists, it can lead to the emergence of a creative idea. It is worth noting that the AI will always interpret the sketch based on its experience, and thus generate images that have at least some recognisable architectural features. Once interpreted by the designer, such images can steer the exploration process in new and unexpected directions.

Are you still sceptical about the potential of AI in design? Me too. Yet, I believe that it is now time to formulate and test hypotheses on this matter. The technology is mature enough, but still not fully explored. I encourage every researcher in this field to stop talking about ‘post-humanism’ and ‘machine hallucinations’, and start thinking seriously about the potential of AI. This technology can mimic some mechanisms of human cognition, and should not be used merely to generate fancy images. A better way of testing AI in design is to address the problem of human-machine interaction, and the benefits it could have on the design process. This, by the way, was also the aim of Sketchpad (Sutherland [6]), the very first CAD system, as well as the very first Graphical User Interface.

Acknowledgements

The construction of the dataset used in this experiment was possible thanks to the collaboration of Sabrina Pugnale and Teresa Mandanici.

References

- [1] Goel V., Sketches of Thought. The MIT Press. 1995.

- [2] Duffy A., Persidis A., and MacCallum K.J., NODES: a numerical and object based modelling system for conceptual engineering design. Knowledge-Based Systems, 1996. 9(3): p. 183-206.

- [3] Lawson B., What Designers Know. Taylor & Francis. 2004.

- [4] Isola P., et al., Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017.

- [5] Xu Z., et al., Architectural Style Classification Using Multinomial Latent Logistic Regression. In ECCV. 2014.

- [6] Sutherland I.E., Sketchpad: a man-machine graphical communication system. 1963.